| Device | Modalities | Frequency (Hz) |
|---|---|---|
| Camera: Orbbec Astra Pro | | |
| Earbud: eSense (Nokia Bell Labs) | | |
| 2× smartwatches: Mobvoi TicWatch Pro | | |
| Smartphone: Samsung S7 | | |
| Smartphone: Huawei P20 | | |
Data are recorded from 5 time-synchronised sensing devices.
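The frequency column suggests the devices may record at different sampling rates. A common preprocessing step (assumed here, not described in the source) is to interpolate every stream onto one shared timeline before combining modalities. The sketch below does this with plain linear interpolation; the function names `resample_linear` and `common_timeline`, and the representation of each stream as sorted timestamps plus values, are hypothetical illustrations rather than the dataset's own tooling.

```python
import math
from bisect import bisect_left
from typing import List, Sequence


def resample_linear(ts: Sequence[float], values: Sequence[float],
                    target_ts: Sequence[float]) -> List[float]:
    """Linearly interpolate a 1-D signal sampled at `ts` onto `target_ts`.

    Hypothetical helper. `ts` must be sorted ascending. Targets outside
    [ts[0], ts[-1]] are clamped to the first or last sample value.
    """
    if not ts or len(ts) != len(values):
        raise ValueError("ts and values must be non-empty and of equal length")
    out: List[float] = []
    for t in target_ts:
        if t <= ts[0]:
            out.append(values[0])
            continue
        if t >= ts[-1]:
            out.append(values[-1])
            continue
        # First index with ts[i] >= t; guaranteed 1 <= i <= len(ts) - 1 here,
        # and ts[i - 1] < t <= ts[i], so the denominator below is never zero.
        i = bisect_left(ts, t)
        t0, t1 = ts[i - 1], ts[i]
        v0, v1 = values[i - 1], values[i]
        w = (t - t0) / (t1 - t0)
        out.append(v0 + w * (v1 - v0))
    return out


def common_timeline(start: float, end: float, rate_hz: float) -> List[float]:
    """Evenly spaced timestamps from `start` to `end` (inclusive) at `rate_hz`."""
    # Small epsilon guards against float error dropping the final sample.
    n = int(math.floor((end - start) * rate_hz + 1e-9)) + 1
    return [start + k / rate_hz for k in range(n)]
```

For example, a hypothetical smartwatch axis and earbud axis could each be passed through `resample_linear` with the same `common_timeline(start, end, rate_hz)` output, after which samples at the same index refer to the same instant. Linear interpolation is chosen only for simplicity; a real pipeline might low-pass filter before downsampling to avoid aliasing.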

|

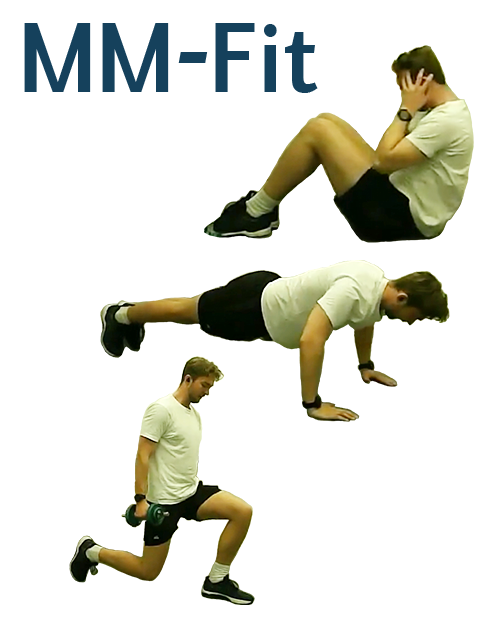

SquatsThe body is lowered at the hips from a standing position and then stands back up to complete a repetition. Hands are push in front for balancing. |

|

Push-upsLegs extended back and balancing the straight body on hands and toes. The arms are flexed to alower and raise the body. Repetitions are counted when the body returns to the starting position. |

|

Dumbbell shoulder pressesFrom a sitting pisition, the weights are pressed upwards until the arms are straight and the weights touch above the head. |

|

LungesOne leg is positioned forward with knee bent and foot flat on the ground while the other leg is positioned behind. The position of the legs is repeatedly swapped. |

|

Standing dumbbell rowsSlightly bent knees, hips pushed back, chest and head up. With elbows at a 60-degree angle, the dumbbells are raised up from the back muscles. |

|

Sit-upsAbdominal exercise done by lying on the back and lifting the torso with arms behind the head. |

|

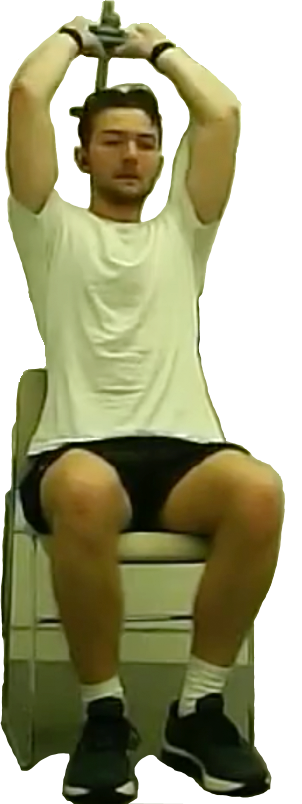

Dumbbell tricep extensionsThe weight is brought overhead, extending the arms straight. Keeping the shoulders still, the elbows are slowly bent, lowering the weight behind the head to where the arms are just lower than 90 degrees, elbows pointing forward. |

|

Bicep curlsBicep curls with weights. Arms are alternated in lifting up the weight with the rest of the body remaining still. |

|

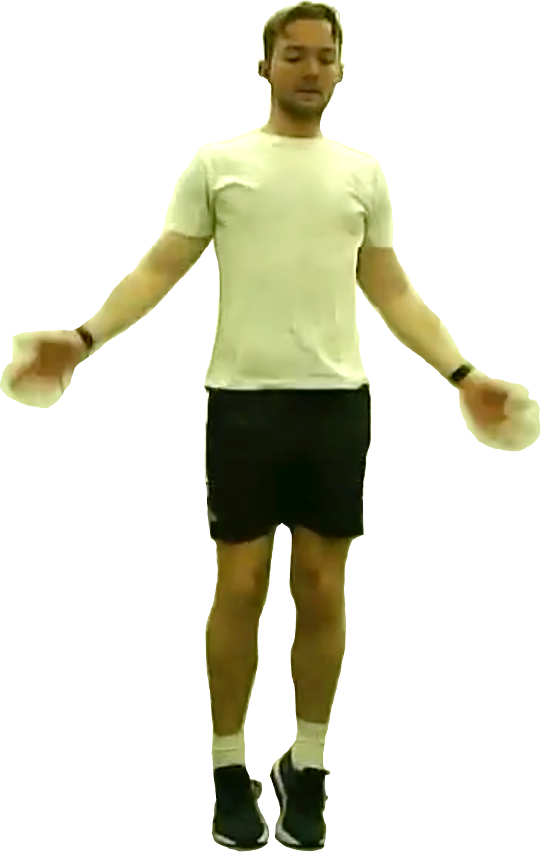

Sitting dumbbell lateral raisesSitting with a dumbbell in each hand and straight back. Slowly lifting the weights out to the side until the arms are parallel with the floor. |

|

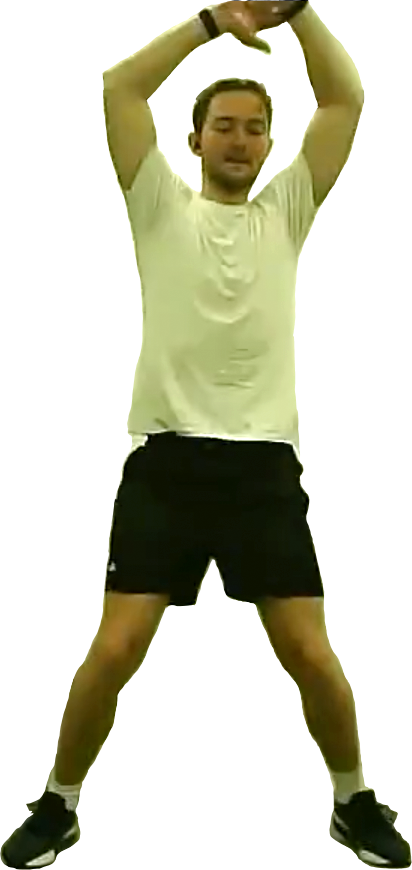

Jumping jacksStarting with the arms on the side and the legs brought together. By a jump into the air, simultaneously the legs are spread and the hands are pushed up to touch overhead. A repetition is completed with another jump returning to the starting position. |
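Several exercise descriptions define a repetition as leaving and then returning to a starting position, and some specify joint angles (elbows at 60 degrees, arms just below 90 degrees). The sketch below shows one generic way to turn that definition into code, assuming a per-frame joint angle can be computed from three body keypoints (for example shoulder, elbow and wrist). It computes the angle, then counts repetitions with a two-threshold (hysteresis) state machine. The keypoint input, the function names `joint_angle` and `count_repetitions`, and any threshold values are hypothetical; this is not the dataset's own counting procedure.

```python
import math
from typing import Sequence


def joint_angle(a: Sequence[float], b: Sequence[float], c: Sequence[float]) -> float:
    """Return the angle ABC in degrees, measured at vertex `b`.

    Works for 2-D or 3-D points, e.g. shoulder (a), elbow (b), wrist (c).
    """
    ba = [p - q for p, q in zip(a, b)]
    bc = [p - q for p, q in zip(c, b)]
    norm_ba = math.sqrt(sum(x * x for x in ba))
    norm_bc = math.sqrt(sum(x * x for x in bc))
    if norm_ba == 0.0 or norm_bc == 0.0:
        raise ValueError("keypoints a and c must differ from the vertex b")
    cos_theta = sum(x * y for x, y in zip(ba, bc)) / (norm_ba * norm_bc)
    # Clamp to [-1, 1] so floating-point drift cannot break math.acos.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


def count_repetitions(signal: Sequence[float], low: float, high: float) -> int:
    """Count repetitions in a 1-D signal using hysteresis thresholds.

    A repetition is counted each time the signal drops below `low`
    (leaving the starting position) and later rises above `high`
    (returning to the starting position).
    """
    if low >= high:
        raise ValueError("low must be strictly less than high")
    reps = 0
    away = False  # True once the signal has left the starting position
    for x in signal:
        if not away and x < low:
            away = True
        elif away and x > high:
            away = False
            reps += 1
    return reps
```

For a bicep curl, for instance, the elbow angle would start near full extension, drop below `low` during the curl and rise above `high` on the way back. Using two thresholds instead of one keeps small jitters around a single cut-off from being counted as extra repetitions.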